Geometric Theory

Mesh Construction Techniques

Box Modelling

Box modelling is a common way to build a 3D representation of your concept in Maya. You start with a base primitive shape, such as a cube, cylinder, sphere or pyramid. Next, you edit the mesh of the shape by adding further subdivisions, which gives you more leeway when shaping it later.

Here I've added subdivisions to each of the height, width and depth. I can then select specific faces and use the extrusion tool to add more complexity to the mesh.

This creates more faces, edges and vertices, thus developing the mesh. Box modelling is great for producing a rough draft of your final product.
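To make the effect of an extrusion on the mesh concrete, here is a minimal sketch in plain Python. It is not Maya's scripting API: the helpers `make_cube`, `count_edges` and `extrude_face` are hypothetical names made up for illustration, and the mesh is assumed to be just a list of vertex positions plus a list of quad faces.

```python
# A minimal quad-mesh sketch (hypothetical helpers, not Maya's API).

def make_cube():
    """Return (vertices, faces) for a unit cube made of 6 quad faces."""
    vertices = [
        (0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),  # z = 0 ring
        (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1),  # z = 1 ring
    ]
    faces = [
        (0, 3, 2, 1),  # z = 0
        (4, 5, 6, 7),  # z = 1
        (0, 1, 5, 4),  # y = 0
        (1, 2, 6, 5),  # x = 1
        (2, 3, 7, 6),  # y = 1
        (3, 0, 4, 7),  # x = 0
    ]
    return vertices, faces


def count_edges(faces):
    """Count unique edges; an edge shared by two faces is counted once."""
    edges = set()
    for face in faces:
        for i in range(len(face)):
            a, b = face[i], face[(i + 1) % len(face)]
            edges.add((min(a, b), max(a, b)))
    return len(edges)


def extrude_face(vertices, faces, face_index, offset):
    """Extrude one face along `offset`, returning a new (vertices, faces)."""
    vertices = list(vertices)
    faces = list(faces)
    old = faces[face_index]

    # Duplicate the face's vertices and move the copies by the offset.
    new = []
    for vi in old:
        x, y, z = vertices[vi]
        vertices.append((x + offset[0], y + offset[1], z + offset[2]))
        new.append(len(vertices) - 1)

    # The moved copy becomes the cap, replacing the original face.
    faces[face_index] = tuple(new)

    # One new side quad bridges each edge of the old face to the cap.
    n = len(old)
    for i in range(n):
        j = (i + 1) % n
        faces.append((old[i], old[j], new[j], new[i]))
    return vertices, faces


cube_v, cube_f = make_cube()
print(len(cube_v), count_edges(cube_f), len(cube_f))  # 8 12 6

ext_v, ext_f = extrude_face(cube_v, cube_f, 1, (0, 0, 1))
print(len(ext_v), count_edges(ext_f), len(ext_f))     # 12 20 10
```

Extruding a single quad adds four vertices, eight edges and a net four faces, which is why a few extrusions quickly build up a more detailed mesh.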

Extrusion Modelling

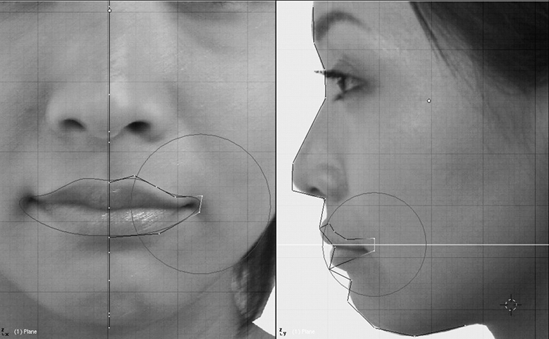

With extrusion modelling, instead of starting with a primitive shape, you start from what will be an edge of your model, drawn using NURBS. This gives you a lot of accuracy when creating bends and curved shapes. Once the lines are made, extrusion tools are used to start forming the model around the lines you have created.
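As a rough sketch of the idea in plain Python, the snippet below samples a curve into points and then extrudes that line into a strip of quads. Two assumptions to flag: a quadratic Bezier curve stands in for a real NURBS curve to keep the maths short, and `bezier_point`, `sample_curve` and `extrude_polyline` are hypothetical helper names, not Maya functions.

```python
# Curve-then-extrude sketch. A quadratic Bezier is used here as a simple
# stand-in for a NURBS curve; all helper names are hypothetical.

def bezier_point(p0, p1, p2, t):
    """Evaluate a quadratic Bezier curve at parameter t in [0, 1]."""
    u = 1.0 - t
    return tuple(u * u * a + 2 * u * t * b + t * t * c
                 for a, b, c in zip(p0, p1, p2))


def sample_curve(p0, p1, p2, segments):
    """Turn the smooth curve into a polyline with `segments` pieces."""
    return [bezier_point(p0, p1, p2, i / segments)
            for i in range(segments + 1)]


def extrude_polyline(points, direction):
    """Sweep a polyline along `direction`, producing one quad per segment."""
    n = len(points)
    moved = [tuple(p + d for p, d in zip(pt, direction)) for pt in points]
    vertices = list(points) + moved
    # Each quad joins a segment of the original line to its moved copy.
    faces = [(i, i + 1, n + i + 1, n + i) for i in range(n - 1)]
    return vertices, faces


# A curved edge in the XY plane, extruded two units along Z.
line = sample_curve((0.0, 0.0, 0.0), (1.0, 2.0, 0.0), (2.0, 0.0, 0.0), 8)
verts, quads = extrude_polyline(line, (0.0, 0.0, 2.0))
print(len(line), len(verts), len(quads))  # 9 18 8
```

Raising `segments` gives a smoother bend at the cost of more faces, which is the same trade-off you make when choosing how finely to divide a curve before extruding it.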

Displaying 3D Models

Direct3D and OpenGL are application programming interfaces (APIs) that are used in consoles; the Xbox, for example, uses Direct3D. They give software access to the graphics card's hardware acceleration for greater performance, which allows more detail in a game environment, along with features such as anti-aliasing and mipmapping.
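Mipmapping stores a texture at a series of progressively smaller sizes so the GPU can pick one that suits how far away a surface is. Here is a small Python sketch of how such a chain of sizes could be worked out, assuming each level halves the previous one until it reaches 1x1. `mip_chain` is a hypothetical helper, not a Direct3D or OpenGL call.

```python
# Sketch of a mipmap chain, assuming each level is half the size of the
# previous one (rounded down, never below 1). Hypothetical helper.

def mip_chain(width, height):
    """Return the (width, height) of every mip level, largest first."""
    levels = [(width, height)]
    while width > 1 or height > 1:
        width = max(1, width // 2)
        height = max(1, height // 2)
        levels.append((width, height))
    return levels


chain = mip_chain(256, 256)
print(len(chain))           # 9
print(chain[0], chain[-1])  # (256, 256) (1, 1)

# Total texels across all levels compared with the base texture alone.
base = 256 * 256
total = sum(w * h for w, h in chain)
print(total / base)         # roughly 1.33
```

The full chain costs only about a third more memory than the original texture.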

Direct3D defines a specific pipeline of stages that the GPU works through in order:

- Input Assembler

- Vertex Shader

- Hull Shader

- Tessellation Stage

- Domain Shader

- Geometry Shader

- Stream Output

- Rasterizer

- Pixel Shader

- Output Merger
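The order of the stages above can be sketched as plain data in Python. The programmable/fixed-function flags are not from the list itself; they reflect my understanding of the Direct3D 11 pipeline, where the "shader" stages run code you write and the others are configured rather than programmed. The names `PIPELINE`, `programmable_stages` and `runs_before` are hypothetical.

```python
# The Direct3D pipeline stages in order. The second field marks whether a
# stage is programmable (runs shader code); this labelling is an assumption
# based on the Direct3D 11 pipeline, not something stated in the list above.
PIPELINE = [
    ("Input Assembler", False),
    ("Vertex Shader", True),
    ("Hull Shader", True),
    ("Tessellation Stage", False),
    ("Domain Shader", True),
    ("Geometry Shader", True),
    ("Stream Output", False),
    ("Rasterizer", False),
    ("Pixel Shader", True),
    ("Output Merger", False),
]


def programmable_stages():
    """Names of the stages that run shader code."""
    return [name for name, programmable in PIPELINE if programmable]


def runs_before(first, second):
    """True if stage `first` comes earlier in the pipeline than `second`."""
    names = [name for name, _ in PIPELINE]
    return names.index(first) < names.index(second)


print(programmable_stages())
print(runs_before("Vertex Shader", "Pixel Shader"))  # True
```

Vertices are processed before anything is turned into pixels, so the Vertex Shader always runs ahead of the Rasterizer and Pixel Shader.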

Of course, Direct3D and OpenGL aren't just used in video games, but anywhere that high-performance graphics are required, such as simulation.

Pre-Rendering vs. Real-Time Rendering

Pre-rendering is a process typically used in the film industry, where a scene is not rendered by the playback device but is instead a recording of a scene rendered on another machine. This allows you to use a more powerful computer (or perhaps several computers) to render your scene, giving much higher levels of detail, with many more polygons and higher-resolution textures. The downside is that it lacks interactivity, which is why it isn't used much in video games. Pre-rendered lightmaps allow for much more realistic lighting, but they are less convincing inside a game engine, as they don't react well to changes in light.

Real-time rendering is done on the playback device, leaving the scene fully interactive. Because of this, the level of detail available in the scene is restricted: fewer polygons and lower-resolution textures can be used. However, as technology progresses and hardware becomes faster, poly counts are always on the rise. Lighting in real time means that dynamic shadows can be cast, for example from a torch held by the player.
